
BitDrones

Note: This research work took place over the course of several years (and multiple development cycles). It has been modified for brevity and clarity.

Summary

The stated intent of the project was to make “magic levitation”; the exact form this would take was left open to interpretation. The result is an interactive 3D shape-changing display: at its core, a programmable matter system.

This is an emerging field. The great majority of work on shape-changing interfaces has focused, and continues to focus, on supported display mechanisms: the elements of the system rely on some form of secondary support to hold their position. The most common of these are so-called 2.5D systems, in which part of one “D”, often the table surface, is given over to a platform that houses the actuation. These systems are useful, but to develop and understand interactions in a fully 3D system, each element must be self-levitating.

Self-levitating systems are capable of unrestricted movement in all three dimensions, either independently or in response to user input. The goal of this project was to support both. Additionally, each voxel (3D pixel) had to be able to display an RGB color.

Process and Role

First-principles design research in the interaction space begins by choosing an area of research (shape-changing displays), then developing a novel technological platform for that area (an indoor, safe-to-touch drone swarm), and finally developing interactions for that system (touch-to-drag).

Once the area of research and the technological platform are determined, technological design and interaction design proceed together. Especially with novel systems, the two work hand in hand: capabilities determine interactions, and interactions determine system design parameters.

My role in this project evolved over time, from contributor to lead interaction designer, system architect, and primary developer. On this project I developed skills in working with drones, local positioning systems, control systems, and real-time systems, and drew on my previous experience with software architecture and interaction design.

The drone units were built by a colleague, Calvin Rubens, and then broken by me. The research was under the direction of Roel Vertegaal (http://humanmedialab.com).

Additional researchers on this project include:

Antonio Gomes

Xujing Zhang

Research and Design

Technical Development

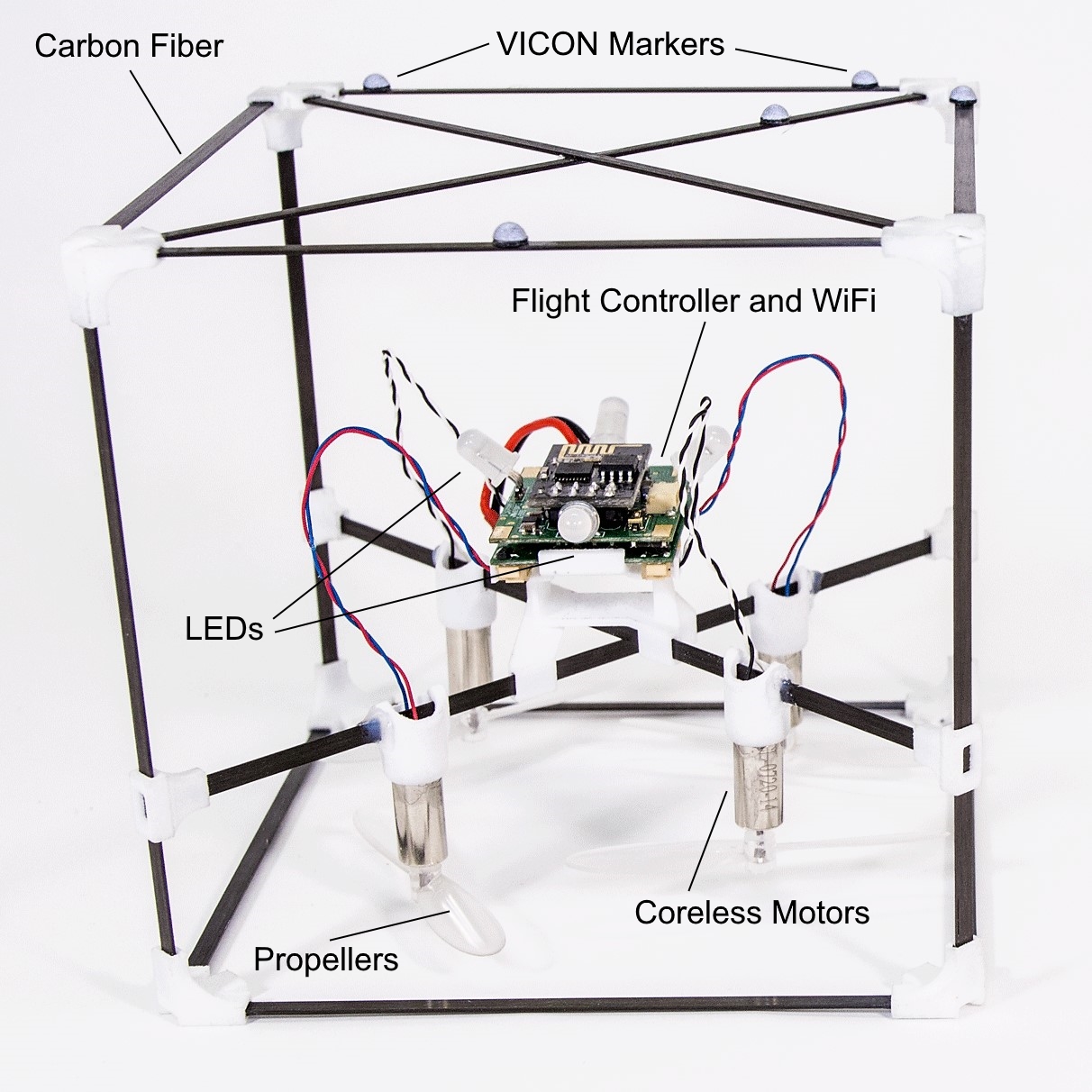

The system uses quad-rotor micro-drones. Each is housed within a wireframe cage that allows it to be touched and handled safely. Each is also equipped with a lightweight LiPo battery for power, a color-changing LED for display, and a WiFi radio for communication and control.

Technical Challenge: Control and Positioning

Small drones operating indoors cannot use GPS: receivers are neither accurate enough nor light enough. The most viable approach for drones under 50 g was a Local Positioning System (LPS) connected to a control system that ran an individual PID controller for each axis of each drone's position.
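To make the per-axis idea concrete, here is a minimal sketch of one PID controller per position axis, grouped per drone. The class names and the gain values are hypothetical placeholders, not the project's tuned values, and the outputs are left as abstract correction terms; mapping them to tilt and thrust commands for a quadrotor is an assumption outside this sketch.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PID:
    """Textbook PID controller for a single axis (gains are hypothetical)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        self.integral += error * dt
        # No derivative on the first sample, to avoid a spike from an unknown history.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


@dataclass
class DroneController:
    """One independent PID per position axis for a single drone."""
    x: PID = field(default_factory=lambda: PID(0.8, 0.05, 0.3))
    y: PID = field(default_factory=lambda: PID(0.8, 0.05, 0.3))
    z: PID = field(default_factory=lambda: PID(1.2, 0.1, 0.4))

    def update(self, target: Vec3, position: Vec3, dt: float) -> Vec3:
        """Return one correction term per axis (x, y, z)."""
        return tuple(
            pid.update(t, p, dt)
            for pid, t, p in zip((self.x, self.y, self.z), target, position)
        )
```

A swarm would then hold one `DroneController` per tracked drone, for example in a dictionary keyed by drone ID. This sketch omits integral clamping (anti-windup) and output limits, which a flying system would almost certainly need.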

The LPS was a Vicon motion-capture system, with retroreflective markers placed on each drone's frame. It fed positions to the control system at 100 Hz, and the control system in turn sent control commands to each drone over the wireless network.
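The write-up does not specify the command protocol, so the following is only a minimal sketch of the data flow: a fixed-rate (100 Hz) loop reads every tracked drone's position, computes a command, and sends one packet per drone. The packet layout (`COMMAND_FORMAT`), the function names, and the roll/pitch/yaw-rate/thrust command set are all hypothetical.

```python
import struct
import time
from typing import Callable, Dict, Tuple

Vec3 = Tuple[float, float, float]
Command = Tuple[float, float, float, float]  # roll, pitch, yaw rate, thrust (hypothetical)

# Hypothetical wire format: uint8 drone id + four little-endian float32 values.
COMMAND_FORMAT = "<B4f"


def encode_command(drone_id: int, command: Command) -> bytes:
    """Pack one drone's command into a compact binary packet."""
    return struct.pack(COMMAND_FORMAT, drone_id, *command)


def run_control_loop(
    read_positions: Callable[[], Dict[int, Vec3]],
    compute_command: Callable[[int, Vec3, float], Command],
    send: Callable[[bytes], None],
    rate_hz: float = 100.0,
    ticks: int = 100,
) -> None:
    """Run `ticks` iterations at roughly `rate_hz`, one packet per drone per tick."""
    period = 1.0 / rate_hz
    next_tick = time.monotonic()
    for _ in range(ticks):
        for drone_id, position in read_positions().items():
            send(encode_command(drone_id, compute_command(drone_id, position, period)))
        next_tick += period
        # Sleep until the next scheduled tick; if we are behind, continue immediately.
        time.sleep(max(0.0, next_tick - time.monotonic()))
```

In practice `send` might wrap a UDP socket's `sendto` call addressed to each drone; that transport is also an assumption. Driving the loop from the arrival of each motion-capture frame, rather than from a local timer, would keep it in step with the 100 Hz tracking data.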

Technical Challenge: Small Drones

For the units to act as individual pieces of matter in a programmable matter display, they were designed to be as small as possible while still maintaining a flight time of at least five minutes. Motors, propellers, and batteries all become less efficient per gram at smaller sizes, so the result was a compromise: a unit sized so that a single drone could be grabbed by an average hand.
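The five-minute requirement can be checked early with a rough estimate of flight time from battery capacity and average current draw. This is a common back-of-envelope approximation, not the project's sizing method, and the numbers below are hypothetical rather than measured values for these drones.

```python
def flight_time_minutes(capacity_mah: float, avg_current_ma: float,
                        usable_fraction: float = 0.8) -> float:
    """Rough flight time: usable battery charge divided by average current draw.

    usable_fraction is an assumed safety margin, since LiPo cells should not be
    fully discharged.
    """
    if avg_current_ma <= 0:
        raise ValueError("average current must be positive")
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    hours = capacity_mah * usable_fraction / avg_current_ma
    return hours * 60.0


# Hypothetical example: a 250 mAh cell with a 2 A average draw.
print(flight_time_minutes(250, 2000))
```

With these placeholder figures the estimate comes out to about 6 minutes. The tradeoff shows up directly: a larger battery raises capacity, but its extra mass also raises the current the motors need to hover.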

Technical Challenge: Testing With Real Systems

Interaction design must, fundamentally, be tested in the real world. This meant that the drone system had to be operational, in some capacity, for most of development. Inevitably, there were times when the system failed and the drones lost control. This was a serious challenge, and it was overcome by hardening the flight control and response systems first.
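The write-up does not describe how the flight control and response systems were hardened. One common safeguard is a watchdog that notices when a drone's tracking data has gone stale and switches it to a failsafe mode. The sketch below shows that idea only; the class names, the 0.1 s timeout, and the meaning of the failsafe action are hypothetical.

```python
import time
from enum import Enum
from typing import Dict, Optional


class Mode(Enum):
    FLYING = "flying"
    FAILSAFE = "failsafe"  # hypothetical: e.g. a controlled descent or motor cut


class PositionWatchdog:
    """Flags drones whose tracking data has gone stale (timeout is a placeholder)."""

    def __init__(self, timeout_s: float = 0.1) -> None:
        self.timeout_s = timeout_s
        self.last_seen: Dict[int, float] = {}
        self.mode: Dict[int, Mode] = {}

    def on_position(self, drone_id: int, now: Optional[float] = None) -> None:
        """Record that a fresh position sample arrived for this drone."""
        self.last_seen[drone_id] = time.monotonic() if now is None else now
        self.mode.setdefault(drone_id, Mode.FLYING)

    def check(self, now: Optional[float] = None) -> Dict[int, Mode]:
        """Latch any drone without a recent sample into FAILSAFE; return all modes."""
        now = time.monotonic() if now is None else now
        for drone_id, seen in self.last_seen.items():
            if now - seen > self.timeout_s:
                self.mode[drone_id] = Mode.FAILSAFE
        return dict(self.mode)

    def reset(self, drone_id: int) -> None:
        """Operator action: return a recovered drone to normal flight."""
        self.mode[drone_id] = Mode.FLYING
```

Latching the failsafe, rather than resuming as soon as tracking returns, avoids a drone lurching back toward an old setpoint after a dropout; whether that suits a given setup is a design choice.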

Interaction Design Development

Standard Interactions Translate

Intuitive gestures such as tap-to-select, drag-to-reposition, and two-handed drag all translate naturally to interacting with the drones in 3D space.

Natural Discovery: Built-In Elasticity / Force Feedback / Physics

Pushing on a drone that is doing its best to hold a given position produces a sense of force feedback that users find intuitive. It follows that if a user is allowed to move a drone, its resistance is limited, because its “new desired position” is continuously updated as the user moves it.
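The setpoint-following behaviour described above can be sketched as a per-tick update: small deviations from the hold position are resisted, while larger ones are treated as the user moving the drone, so the setpoint moves toward the measured position. The function name, the 5 cm grab threshold, and the follow gain are hypothetical values chosen for illustration.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def update_setpoint(setpoint: Vec3, position: Vec3,
                    grab_threshold_m: float = 0.05,
                    follow_gain: float = 0.5) -> Vec3:
    """Let the drone's hold position give way when a user pushes it.

    Deviations up to grab_threshold_m (ordinary hover error) leave the setpoint
    unchanged, so the position controller pushes back. Larger deviations move
    the setpoint a fraction follow_gain of the way toward the measured position
    on each control tick.
    """
    if math.dist(setpoint, position) <= grab_threshold_m:
        return setpoint
    return tuple(s + follow_gain * (p - s) for s, p in zip(setpoint, position))
```

Because the setpoint keeps chasing the hand, the position error, and with it the controller's push-back, stays roughly bounded while the user drags the drone. That bounded error is what users feel as a gentle, elastic resistance.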

Natural Discovery: Play

Unencumbered by the fear that larger, more powerful drones inspire, and with the components safely shielded, participants of all ages took great pleasure in simply “playing” with the drones. This was evident in every demonstration: users enjoyed moving, dropping, and tapping the drones.

Secondary Interactions

Certain interactions (such as color picking) required a higher-resolution display. For these, a smartphone running a simple Android application stood in for the user's own phone. For telepresence, or remote collaboration, a larger drone carrying a flexible display was used, along with a video-calling application.

An additional input device was developed for interacting with the drones at a distance: a wand tracked by the local positioning system. Interactions with this device included ray-select, lasso-select, point-and-click, and remote click-and-drag.
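Ray-select with a tracked wand can be sketched as a simple geometric test: cast a ray from the wand's tracked position along its pointing direction and pick the drone closest to that ray. The function name, the 15 cm tolerance, and the rule of preferring the nearest candidate along the ray are assumptions for illustration, not the project's documented implementation.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_select(origin: Vec3, direction: Vec3, drones: Dict[str, Vec3],
               max_offset_m: float = 0.15) -> Optional[str]:
    """Return the id of the drone the wand points at, or None.

    A drone is a candidate if it lies in front of the wand and within
    max_offset_m of the ray; the candidate nearest along the ray wins.
    """
    norm = math.sqrt(_dot(direction, direction))
    if norm == 0:
        raise ValueError("wand direction must be non-zero")
    d = tuple(c / norm for c in direction)
    best_id, best_t = None, math.inf
    for drone_id, pos in drones.items():
        offset = tuple(p - o for p, o in zip(pos, origin))
        t = _dot(offset, d)  # distance along the ray to the closest point
        if t <= 0:
            continue  # drone is behind (or level with) the wand
        closest = tuple(o + t * c for o, c in zip(origin, d))
        if math.dist(pos, closest) <= max_offset_m and t < best_t:
            best_id, best_t = drone_id, t
    return best_id
```

Preferring the nearest candidate mimics occlusion: when two drones line up along the ray, the one closer to the user is selected.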

Documentation and Demonstration

Research Papers, Video and In Person Demonstration Events

The research papers for this system contained detailed information on the system construction and the interactions presented. The interactions were best presented in video or photographs, where the configuration of the system could be seen.

Logistically, preparing and moving a drone system for a demonstration is no small task. The system is, technically, “portable”, but to move it, the local positioning system must either be moved with it or be set up in advance at the destination. The drones themselves are relatively fragile and must be packed carefully, and LiPo batteries are a fire hazard that must be transported with care.

The demonstration venue must provide power and space. Additionally, because most LPSs rely on infrared, there cannot be too much ambient sunlight. Finally, WiFi congestion can disrupt communication with the drones.

Lessons Learned

A uniformly top-down (centralized) control system was quicker to develop and debug, but a system with distributed control would have been more robust. It would also have been able to use dead reckoning to ride out intermittent losses of positioning data.
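Dead reckoning in this context means estimating a drone's position from its last known state when fresh LPS data is missing. The sketch below uses the simplest constant-velocity model, with a hypothetical cap on how long it will coast before giving up; an onboard implementation would more likely fuse IMU data, so treat this only as an illustration of the idea.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class DeadReckoner:
    """Estimate position between LPS updates using a constant-velocity model."""

    def __init__(self, max_coast_s: float = 0.25) -> None:
        self.max_coast_s = max_coast_s  # hypothetical limit on extrapolation time
        self.last_pos: Optional[Vec3] = None
        self.last_t: Optional[float] = None
        self.velocity: Vec3 = (0.0, 0.0, 0.0)

    def on_measurement(self, pos: Vec3, t: float) -> None:
        """Update velocity by finite difference and store the latest fix."""
        if self.last_pos is not None and t > self.last_t:
            dt = t - self.last_t
            self.velocity = tuple((p - q) / dt for p, q in zip(pos, self.last_pos))
        self.last_pos, self.last_t = pos, t

    def estimate(self, t: float) -> Optional[Vec3]:
        """Extrapolated position at time t, or None once the gap is too long."""
        if self.last_pos is None or t - self.last_t > self.max_coast_s:
            return None
        dt = t - self.last_t
        return tuple(p + v * dt for p, v in zip(self.last_pos, self.velocity))
```

Raw finite differences at 100 Hz amplify tracking noise, so a practical version would smooth the velocity estimate; returning `None` after the coast limit lets the caller fall back to a failsafe instead of trusting an ever-growing extrapolation.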

A system to dock and charge the drones would have reduced the time spent swapping and managing batteries. Combined with a landing routine, this would have made the system fully automated.